By John Bates

Noam Chomsky has infamously stated, "There is no such thing as the probability of a sentence." For that, he is roundly mocked by computational linguists everywhere, whose statistical models have delivered tremendous development in the practical analysis of spoken and written speech.

His argument, though, is worth considering, and it is much more subtle than it sounds. "Colorless green ideas sleep furiously" is only the starting point of his critique: a sentence that is syntactically correct, and which can be assigned a (low) probability under statistical models, but which is semantically meaningless. (Of course, by virtue of its becoming a standard example in linguistics, it has become both more probable and more meaningful, although not in a way that is easily amenable to standard analysis.) Some computational linguists will argue that because that string of words is clearly possible, it is open to consideration, and that various approaches will weed out semantically meaningless cruft, much as the human mind, fundamentally a statistical engine, will puzzle over and then discard nonsense.
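To make "assigned a (low) probability" concrete, here is a minimal sketch of one kind of statistical model: a bigram model with add-one smoothing. The toy corpus, the function names, and the `<unk>` placeholder for unseen words are all assumptions made for illustration; this is not any particular system's method.

```python
from collections import Counter
import math

# Toy corpus, invented for illustration only.
corpus = [
    "green ideas are new ideas",
    "the children sleep soundly",
    "new ideas spread furiously",
    "the green leaves fall",
]

def train_bigrams(sentences):
    """Count context words and adjacent word pairs, and collect the vocabulary."""
    unigrams, bigrams = Counter(), Counter()
    vocab = {"</s>", "<unk>"}  # end marker plus a placeholder for unseen words
    for s in sentences:
        tokens = ["<s>"] + s.split() + ["</s>"]
        vocab.update(s.split())
        unigrams.update(tokens[:-1])             # each token used as a context
        bigrams.update(zip(tokens, tokens[1:]))  # each adjacent pair
    return unigrams, bigrams, vocab

def log_prob(sentence, unigrams, bigrams, vocab):
    """Log-probability of a sentence under the add-one smoothed bigram model."""
    words = [w if w in vocab else "<unk>" for w in sentence.split()]
    tokens = ["<s>"] + words + ["</s>"]
    total = 0.0
    for prev, word in zip(tokens, tokens[1:]):
        # Add-one smoothing: unseen pairs get a small but nonzero probability.
        total += math.log((bigrams[(prev, word)] + 1) / (unigrams[prev] + len(vocab)))
    return total

unigrams, bigrams, vocab = train_bigrams(corpus)
nonsense = log_prob("colorless green ideas sleep furiously", unigrams, bigrams, vocab)
ordinary = log_prob("the children sleep soundly", unigrams, bigrams, vocab)
print(nonsense < ordinary)  # the nonsense sentence scores lower, but not zero
```

The number the model produces depends only on which word pairs appeared in the corpus. Nothing in it reflects who is speaking, to whom, or why.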

Chomsky's argument goes beyond the mere syntactic/semantic split, though. Computational linguists generally start with a set of utterances, text, or whole corpora, examining word and feature frequencies. By using these starting points, they build after-the-fact models of language. They can map those models to search for or summarize both syntactic and semantic features, e.g. named entity recognition, topic selection, or bias detection. They regard words and combinations of words as mere (ha!) random events, sequences of which can be assigned specific meanings. Chomsky dismisses that randomness. In his view, words have meaning. Words and sentences are created by people.

That's not to say that Noam Chomsky is some kind of starry-eyed mystic claiming special status for the human soul. Instead, his point (or rather, my interpretation of his point) is that words are objects of communication between two deliberative entities, and that the meaning of a sentence is therefore only interpretable taken in that context. As I write this sentence, it is the product of the sum total of all of my experiences and decisions up to this point, as well as the physical apparatus that I am using to deliver it. As you read it, I am making physical changes in your brain. Right now! You can't stop me! Ha!

Statistical models tend to strip away this context. His point might be better taken as something closer to: you can't predict what I'm going to say next, and I can't predict how you'll interpret what I say. Except, of course, for the context that we share. If I stand on the corner and talk about the coming apocalypse, you can probably guess quite a bit about me and my current mental state. If I babble instead about colorless green ideas, you're bound to be lost, and I cease to be communicating. Regardless, the likelihood of the words that I use has little bearing on the act of communication.

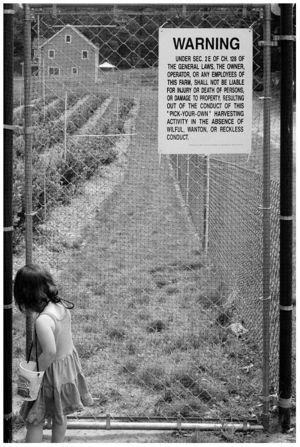

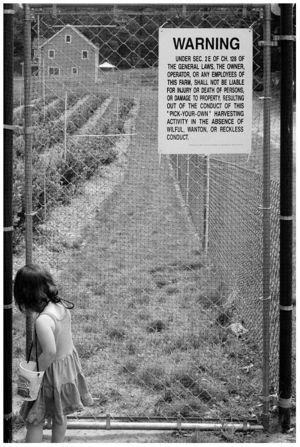

As someone who dabbles in both computational linguistics and statistical processes, I can take issue with this argument. I can point to numerous techniques that incorporate broader contexts into analyses. I can illustrate problem after problem that has fallen to our tools, and I cannot disparage anybody for attempting new challenges. But ACQUINE clearly demonstrates the shortcomings articulated by Chomsky. Photography, like writing, is fundamentally an act of communication, requiring the full context of a human's knowledge to go beyond the shallowest parse.

The frame is a perfect example. Subconsciously, we clearly do prefer images with boundaries. Consciously, or possibly semantically, we are able to eliminate frames from our consideration of an image, or to view them as an extraneous feature. ACQUINE's developers must have forgotten about them, probably because frames are so easily elided from our perception. But on the social networks, people posting simple snapshots rarely add a frame, while people who are trying to be "artists" do. Artists post in black-and-white (why? context, again...) but parents dump their flash memory cards to the web.

Obviously (at least, to me) the ACQUINE developers are not idiots. They know all this better than I do. They are attempting a grand thing: analyzing the affective perception of visual content. And there are some pretty cool refinements that are clearly possible: for example, clustering the responses of the raters can lead to social filtering, so that all of us who are Callahan fans can be distinguished from the infrared HDR cat lovers.

But "collective intelligence" and social filtering are at best a highly refined popularity contest, and fail at the one task that is most interesting: finding and evaluating truly original content. For, if it is original, it is, by definition, low probability, and also incomparable. How can you rate that which has never before been seen, if you can't understand it?

John

Featured Comment by Seinberg: "Johns Hopkins had natural language processing (i.e. computational linguistics) talks every Tuesday this past semester. I attended many of them, as someone whose research is not in natural language processing but who takes a serious interest in it and has dabbled with it in the past. The first guest lecturer was Martin Kay, one of the fathers of computational linguistics. Someone asked him point-blank whether the standard approach taken today by the Big Names like Google and the professors at the most celebrated computational linguistics university on the planet (Hopkins) is enough to eventually pass the Turing Test. The standard approach is statistical analysis. His response was immediate and he said, 'No. I think it will be a new method not in use today.'

"He did eventually back-track a bit and admit that statistical analysis is helpful for several very specific processes, for instance part-of-speech tagging, for which statistical analysis is 97 or 98% accurate given a good training corpus (far better than the majority of humans do).

"Where statistical analysis falls down, however, tends to be precisely where Chomsky seems to be going with Green Photographs: understanding context. Reference resolution is still a wide open problem. If several people are discussed in previous sentences, resolving pronouns like 'he,' 'she,' 'it,' etc. is very difficult. In parsing the semantic content of sentences, these pronouns should be linked to the entities to which they refer. Humans do this automatically, but current computational methods do it poorly. Statistical analysis does this very very poorly, particularly with complicated language. The current best we can do (more or less) is 'sentiment extraction,' where analysis tries to extract small parts of the meaning to get the general idea of what people are saying, e.g. in blogs. For instance, 'does this blogger support Obama or McCain?' But that's easy.

"Then there's the problem of the pink elephant in the room. Imagine the date is September 12th in 2001. Everyone who talks to each other says things like, 'How could it have happened?' 'Isn't it terrible?' 'How are you and your family?' Every person in the US—and probably most in the world—knew precisely what had happened. But a statistical parser would be at a complete loss, because it wouldn't have any context in which to understand the Pink Elephant of 9/11 that was in the air.

"So statistical analysis needs to be augmented (or: should be used to augment) some other method, and that other method needs to include knowledge in its analysis. Then, of course, statistics might be used to determine with 95% probability that on 9/12 the Pink Elephant is the events collectively known as 9/11 (or 'the terrorist attacks' or whatever).

"One final link. A professor at my university is trying to incorporate knowledge into natural language processing, although perhaps to his detriment: he uses very little statistical processing, claiming the whole venture is for the birds."

Mike comments: Where I come down on ACQUINE is that it wouldn't work even if it worked.

One thing I've been threatening to do for a while now is to take a single photograph and map different "modes of approach" to it—that is, specify some of the various ways (formalistic, coloristic, epistemological, factual, connotational, emotional, etc., etc.) different viewers might choose to think about it, giving concrete examples of each mode of approach so people might actually understand what the heck I was talking about. I might still do it, too...another of those "one day" projects. At the very least I think it might help illuminate why there can't be a single standard for aesthetics, even if we don't define aesthetics as personal to begin with—which, incidentally, I do.

Featured Comment by Paul Pomeroy: "In Claude Shannon's seminal 1948 paper, 'A Mathematical Theory of Communication,' he says up front that, 'The fundamental problem of communication is that of reproducing at one point either exactly or approximately a message selected at another point. Frequently the messages have meaning; that is they refer to or are correlated according to some system with certain physical or conceptual entities. These semantic aspects of communication are irrelevant to the engineering problem.'

"I think most people overlook the full significance of what he was saying. In part, he was saying that 'information' doesn't exist in the middle. What goes across the wire is without meaning and 'meaningless information' is an oxymoron. Information, true to its -ation suffix, is a process. It's not a noun, it's a happening and for people it happens mostly under the level of consciousness.

"The history of computational intelligence is riddled with examples of seemingly brilliant people who have made the mistake of thinking the meaning was in the message. This has led to all sorts of naive optimism and costly errors. As an example of the latter, consider the 1999 Mars probe that crashed because one device was 'sending information' using imperial units (pounds) to a device that was 'receiving information' in metric units.

"My favorite example of naive optimism involves an Artificial Intelligence project funded by the military. The goal was a machine that could look at a photograph (or real-time image) and locate any hidden enemy tanks. This was to be done using neural networks which were 'trained' by showing them thousands of actual photographs, some including tanks that were hidden (some not very well, others so well that experts couldn't find them) and others with no tanks at all.

"After a surprisingly short training period the neural networks suddenly started getting really good at indicating which photos had the hidden tanks. In fact, they were perfect. So the military started trying them out in real-life situations and...wait for it...they were completely useless (actually worse than purely random guessing).

"It took quite a while to finally figure out what had happened. It turns out that the original set of photos had been taken over a two-day period. They first photographed locations without any tanks and then they brought in the tanks, hid them and took another set of photographs. No one took notice of the fact that on the second day the sky was overcast while it had been sunny during the first shoot. No one, that is, except for the trained neural network. So, for about a million dollars the military got a device that could tell them whether or not a photo was taken on a sunny day.

"There haven't really been a whole lot of advances since then. And maybe I'm mistaken, but it seems to me that finding aesthetic quality in a photograph is a bit tougher. Unless, of course, it turns out that aesthetics is just a fancy word for tanks on an overcast day."

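The failure mode in the tank story can be sketched in a few lines. This is a toy illustration under loud assumptions, not a reconstruction of the project described: each photo is reduced to two made-up features, all numbers are invented, and a single-threshold rule (a "decision stump") stands in for the neural network.

```python
# Each photo is reduced to two hypothetical features in [0, 1]:
# (sky_brightness, tank_evidence). Label 1 means "tank present".
# All numbers are invented for illustration.
train = [
    # Day 1: sunny, no tanks.
    ((0.9, 0.2), 0), ((0.8, 0.4), 0), ((0.85, 0.5), 0), ((0.95, 0.3), 0),
    # Day 2: overcast, tanks hidden in the scene.
    ((0.3, 0.6), 1), ((0.2, 0.45), 1), ((0.25, 0.7), 1), ((0.35, 0.35), 1),
]
field = [
    ((0.9, 0.65), 1), ((0.85, 0.55), 1),  # sunny days, tanks present
    ((0.3, 0.25), 0), ((0.2, 0.3), 0),    # overcast days, no tanks
]

def accuracy(rule, data):
    """Fraction of photos a (feature, threshold, above_means_tank) rule labels correctly."""
    feature, threshold, above_means_tank = rule
    hits = 0
    for features, label in data:
        predicted = int((features[feature] > threshold) == above_means_tank)
        hits += predicted == label
    return hits / len(data)

def fit_stump(data):
    """Pick the single-feature threshold rule with the best training accuracy."""
    candidates = [
        (f, x[f], above)
        for x, _ in data
        for f in range(len(x))
        for above in (True, False)
    ]
    return max(candidates, key=lambda rule: accuracy(rule, data))

rule = fit_stump(train)
print(rule)                   # the chosen rule tests feature 0, sky brightness
print(accuracy(rule, train))  # perfect on the two-day training set
print(accuracy(rule, field))  # wrong on every field photo
```

The learner is rewarded only for separating the training photos, and brightness separates them perfectly, so brightness is what it learns. Whether a tank is present never enters into it.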
, which I totally freakin' love and which you might as well bury me with), lots of my readers go buy it. But when I recommend a random non-fiction book on a random topic, like this one

, which I found immersively fascinating and a great read, well, pretty much nobody goes and buys it. Why? Because you know my taste and opinions about photography. You give me credit for expertise, and you probably have a sense whether you are going to enjoy my recommendations or not. But you have no such faith and confidence in my general taste about items outside of my expertise, in which case I am just another guy on the internet.