By Ctein

We don't see things the way our cameras do. The qualities of human vision can be summarized by saying that we're lousy at absolutes, pretty good at relatives, and brilliant at differences.

Consider tonality. Although human vision has an extremely long "dynamic range," on the order of 10,000,000,000:1, we don't see a lot of tonal steps. That's something we can measure. Set up two equally illuminated squares next to each other. Slowly turn up (or down) the brightness of one of them and note the point at which you can just see the demarcation line between them. That's the smallest tonal difference you can see at that brightness level.

You can continue stepping your way up (or down) the brightness scale until you hit pitch-blackness or blinding nova-white. Count up all the steps, and that's the total number of distinct tones that you can see. For the average human eye, it's about 650. In the middle of that brightness scale we can distinguish tonal differences of a mere 1%, but towards the extremes it can require a half-stop difference in brightness for us to be able to see it. This has some interesting consequences.

First, this explains why we have trouble seeing shadow detail in prints under dim lighting but see it so clearly in the sunlight; the lower the luminance, the poorer our tonal discrimination. Conversely, in sunlight, it's hard to see the subtle tonal differences in the highlights.

Second, there is an optimum illuminance for viewing a print that will maximize the number of distinct tonal steps we can see in it. For a typical high-quality photographic or inkjet print with a brightness range of roughly 200:1, that's around 200–300 foot-candles, and the maximum number of tonal steps we can see in that print is 250–300.
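
To put rough numbers on the 250–300 figure above: if our discrimination were a constant 1% across the whole range (the mid-scale best case), the count of distinguishable steps in a brightness range R would be log(R) / log(1.01). This is a simplified sketch, not a vision model. The name `max_tonal_steps` is illustrative, and the constant-fraction assumption is exactly what real vision violates.

```python
import math

def max_tonal_steps(brightness_range: float, weber_fraction: float) -> int:
    """Count distinguishable steps across a brightness range, ASSUMING the
    smallest visible difference is a constant fraction of the current level.
    (Real discrimination varies with luminance; this is only a bound.)"""
    # Each just-visible step multiplies brightness by (1 + weber_fraction),
    # so the number of steps is log(range) / log(1 + fraction), rounded down.
    return math.floor(math.log(brightness_range) / math.log1p(weber_fraction))

# A half stop is a brightness factor of sqrt(2), i.e. about 41% brighter.
HALF_STOP = 2 ** 0.5 - 1

best_case = max_tonal_steps(200, 0.01)        # 1% discrimination everywhere
worst_case = max_tonal_steps(200, HALF_STOP)  # half a stop everywhere
print(best_case, worst_case)
```

For a 200:1 print this prints `532 15`: about 532 steps if 1% held everywhere, about 15 if half a stop held everywhere. The real figure of 250–300 falls between the two, consistent with discrimination that is fine in the middle of the scale and coarser toward the ends.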

Third, this is why 8-bit printer output looks as good as it does. It's close to our visual capabilities, but not quite there, because the 256 gray levels in the print don't line up with the visual discrimination steps. 16-bit output would produce visibly better tonality, though not dramatically better.
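
One way to see that mismatch: suppose, as a simplifying assumption, that the 256 levels are spread along a plain gamma 2.2 curve (real printer drivers apply their own transfer curves). The brightness jump between adjacent codes is then ((v+1)/v)^2.2, which works out to roughly 11% near code 20, 1.7% near code 128 and 1.1% near code 200. The step size varies by an order of magnitude across the scale, while the eye's threshold varies in its own, different way. `relative_step` is a hypothetical helper name.

```python
GAMMA = 2.2  # ASSUMED encoding gamma; real printer pipelines differ

def relative_step(code: int, gamma: float = GAMMA) -> float:
    """Fractional brightness increase from 8-bit code `code` to `code + 1`,
    assuming linear brightness = (code / 255) ** gamma."""
    if not 1 <= code <= 254:
        raise ValueError("code must be in 1..254")
    # The 1/255 normalization cancels in the ratio, leaving ((code+1)/code)**gamma.
    return ((code + 1) / code) ** gamma - 1

for code in (20, 128, 200):
    print(f"code {code:3d}: {relative_step(code):.1%} brighter than the code below")
```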

Under many circumstances, we see far fewer tonal steps than that. As I said, we're good at differences; the human nervous system is excellent at performing differential analysis. We can pick up on that sharp boundary between two regions of slightly different brightness. Blur out the boundary and our abilities drop dramatically. Anyone who has ever tried to uniformly illuminate a backdrop by eye knows what I'm talking about. I'm certain there is nobody here who can do that to within 1/4 stop, which is about a 20% variation. Most folks can't even get it within half a stop.
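
Stops are powers of two, so a difference of s stops is a brightness ratio of 2^s. As a quick check of the figures above, a quarter stop is 2^0.25 ≈ 1.19 (about 19%, roughly the 20% quoted) and half a stop is about 41%. `stops_to_percent` is an illustrative name.

```python
def stops_to_percent(stops: float) -> float:
    """Brightness increase, in percent, for a difference of `stops` stops.
    One stop doubles the light, so s stops is a factor of 2**s."""
    return (2 ** stops - 1) * 100

for s in (0.25, 0.5, 1.0):
    print(f"{s} stop -> {stops_to_percent(s):.0f}% brighter")
```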

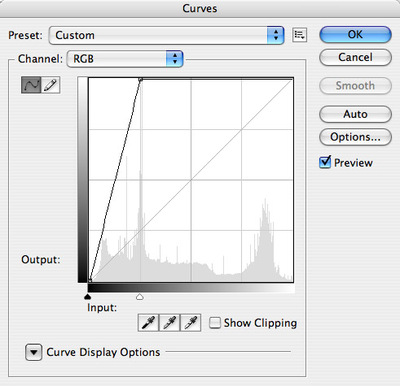

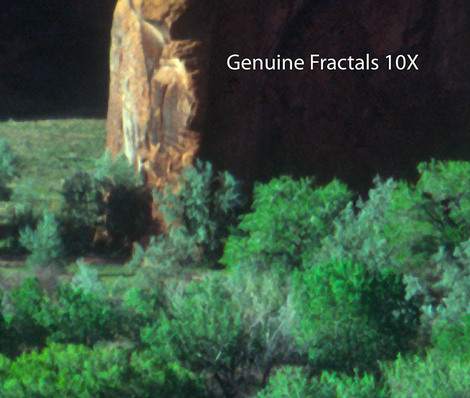

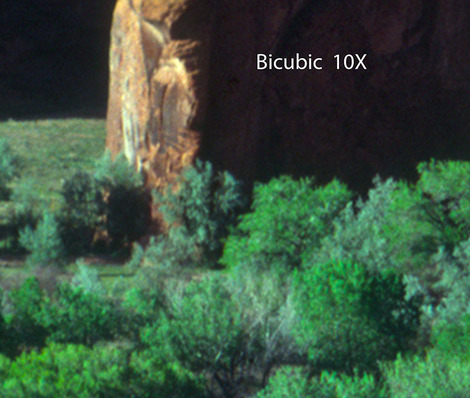

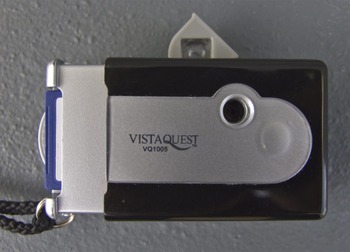

Many will see this as a uniform light gray field, but on a calibrated gamma 2.2 monitor there's about a half-stop difference in brightness from the center to the edge.

Here the same brightness variation is in four well-defined steps. Now it's pretty easy to see that the brightness isn't uniform. Our vision is good at evaluating brightness differences but not absolutes.
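
If you'd like to try this on your own monitor, fields like these can be approximated with a short script. It is a sketch under stated assumptions, not how the images above were made: the center luminance (0.6 of full white), a center-brighter falloff, a pure gamma 2.2 encoding, and bands evenly spaced in stops are all illustrative choices. It writes binary PGM files using only the standard library.

```python
import math

GAMMA = 2.2          # ASSUMED display gamma, matching the calibrated monitor
CENTER_LINEAR = 0.6  # hypothetical linear luminance at the center, 0..1
FALLOFF_STOPS = 0.5  # total dimming from center to edge, in stops

def encode(linear: float) -> int:
    """Convert linear luminance (0..1) to an 8-bit gamma-encoded gray value."""
    return round(255 * linear ** (1 / GAMMA))

def field(size: int, steps: int = 0) -> list[list[int]]:
    """Square gray field that dims by FALLOFF_STOPS from center to edge.
    steps=0 gives a smooth radial ramp; steps>=2 gives that many discrete bands."""
    if steps == 1 or steps < 0:
        raise ValueError("steps must be 0 (smooth) or at least 2")
    half = size / 2
    pixels = []
    for y in range(size):
        row = []
        for x in range(size):
            # Distance from center, normalized so 1.0 is the middle of an edge;
            # clamped so the corners get the edge value.
            r = min(math.hypot(x + 0.5 - half, y + 0.5 - half) / half, 1.0)
            if steps:
                # Snap to one of `steps` bands, evenly spaced in stops.
                band = min(int(r * steps), steps - 1)
                r = band / (steps - 1)
            row.append(encode(CENTER_LINEAR * 2 ** (-FALLOFF_STOPS * r)))
        pixels.append(row)
    return pixels

def write_pgm(path: str, pixels: list[list[int]]) -> None:
    """Write an 8-bit binary PGM (P5) file, which most image viewers can open."""
    height, width = len(pixels), len(pixels[0])
    with open(path, "wb") as f:
        f.write(f"P5 {width} {height} 255\n".encode("ascii"))
        for row in pixels:
            f.write(bytes(row))

if __name__ == "__main__":
    write_pgm("smooth.pgm", field(512))             # smooth half-stop ramp
    write_pgm("stepped.pgm", field(512, steps=4))   # same range in four steps
```

Viewed side by side on a calibrated display, the stepped file should make the falloff far easier to spot than the smooth one, for the reason given above: the eye locks onto the sharp boundaries.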

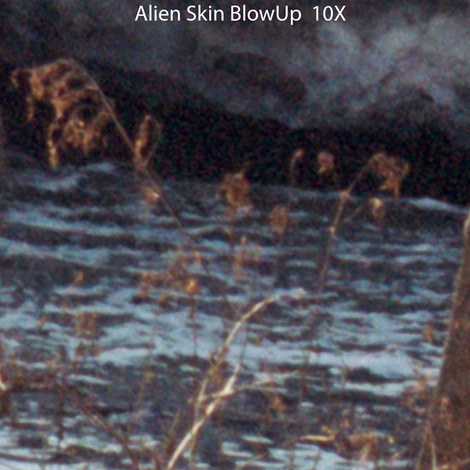

How we see fine detail is another matter; in that regard, we're better than most people realize. That's a topic for another day.

____________________

Ctein